Taking advantage of this week AWS re:Invent and next week BlackHat Europe, I wanted to push forward a new version of Prowler.

In case you are new to Prowler:

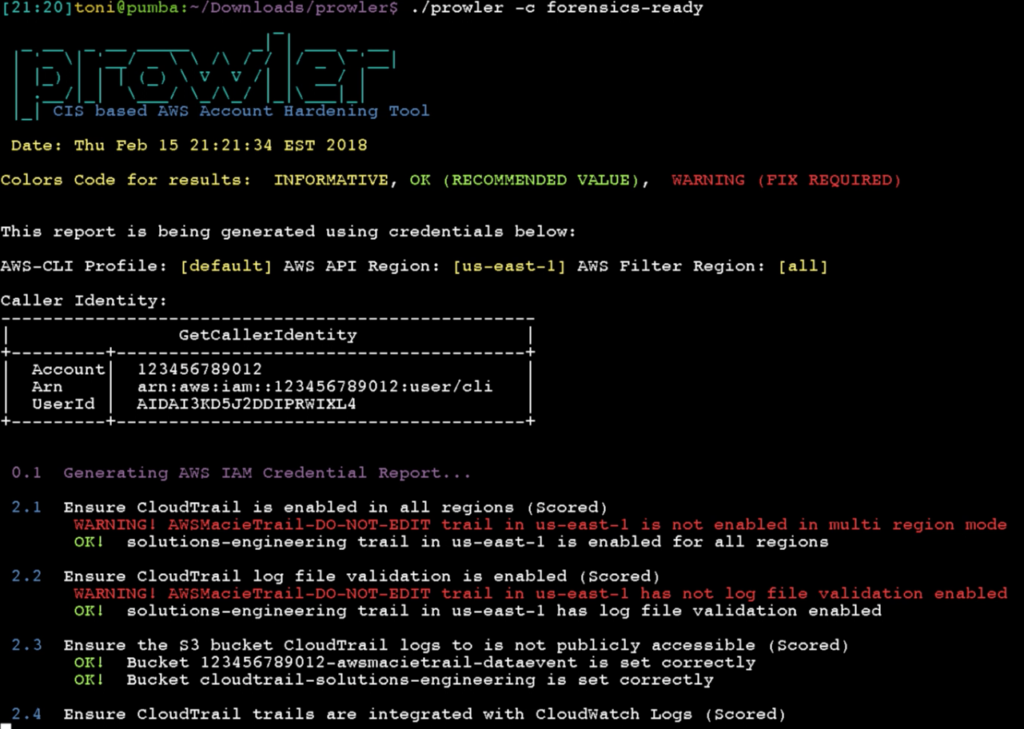

Prowler is an AWS Security Best Practices Assessment, Auditing, Hardening and Forensics Readiness Tool. It follows guidelines of the CIS Amazon Web Services Foundations Benchmark and DOZENS of additional checks including GDPR and HIPAA groups. Official CIS benchmark for AWS guide is here.

This new version has more than 20 new extra checks (of +90), including GDPR and HIPAA group of checks as for a reference to help organizations to check the status of their infrastructure regarding those regulations. Prowler has also been refactored to allow easier extensibility. Another important feature is the JSON output that allows Prowler to be integrated, for example, with Splunk or Wazuh (more about that soon!). For all details about what is new, fixes and improvements please see the release notes here: https://github.com/toniblyx/prowler/releases/tag/2.0

For me, personally, there are two main benefits of Prowler. First of all, it helps many organizations and individuals around the world to improve their security posture on AWS, and using just one easy and simple command, they realize what do they have to do and how to get started with their hardening. Second, I’m learning a lot about AWS, its API, features, limitations, differences between services and AWS security in general.

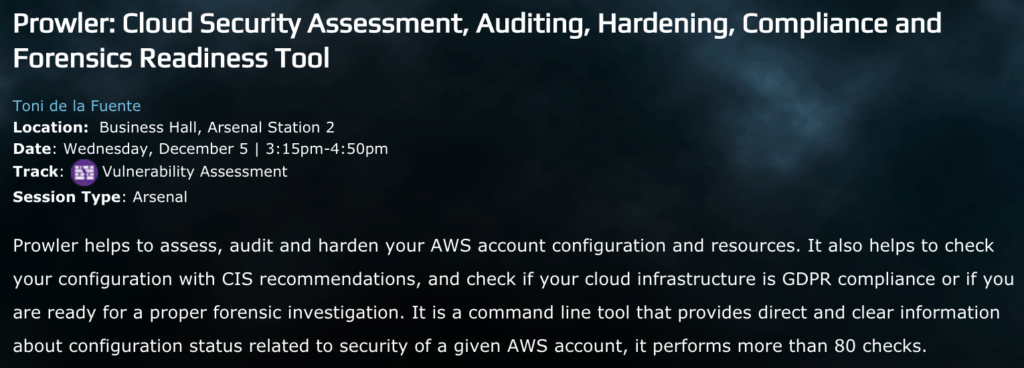

Said that, I’m so happy to present Prowler 2.0 in BlackHat Europe next week in London! It will be at the Arsenal

and I’ll talk about AWS security, and show all new features, how it works, how to take advantage of all checks and output methods and some other cool things. If you are around please come by and say hello, I’ve got a bunch of laptop sticklers! Here all details, Location: Business Hall, Arsenal Station 2. Date: Wednesday, December 5 | 3:15pm-4:50pm. Track: Vulnerability Assessment. Session Type: Arsenal

BIG THANKS!

I want to thank the Open Source community that has helped out since first day, almost a thousand stars in Github and more than 500 commits talk by itself. Prowler has become pretty popular out there and all the community support is awesome, it motivates me to keep up with improvements and features. Thanks to you all!!

Prowler future?

Main goals for future versions are: to improve speed and reporting, including switch base code to Python to support existing checks and new ones in any language.

If you are interested on helping out, don’t hesitate to reach out to me. \m/