SCAP (Security Content Automation Protocol)

SCAP (Security Content Automation Protocol) provides a mechanism to check configurations, manage vulnerabilities and evaluate policy compliance for a variety of systems. One of the most popular implementations of SCAP is OpenSCAP, which is very helpful for vulnerability assessment and also as a hardening helper.

In this article I’m going to show you how to use OpenSCAP in 5 minutes (or less). We will create reports and also dynamically harden a CentOS 7 server.

Installation for CentOS 7:

yum -y install openscap openscap-utils scap-security-guide

wget http://people.redhat.com/swells/scap-security-guide/RHEL/7/output/ssg-rhel7-ocil.xml -O /usr/share/xml/scap/ssg/content/ssg-rhel7-ocil.xml

Create a configuration assessment report in XCCDF (eXtensible Configuration Checklist Description Format):

oscap xccdf eval --profile stig-rhel7-server-upstream \
--results $(hostname)-scap-results-$(date +%Y%m%d).xml \
--report $(hostname)-scap-report-$(date +%Y%m%d).html \
--oval-results --fetch-remote-resources \
--cpe /usr/share/xml/scap/ssg/content/ssg-rhel7-cpe-dictionary.xml \
/usr/share/xml/scap/ssg/content/ssg-centos7-xccdf.xml
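If you script the scan, it helps to know that `oscap xccdf eval` reports its outcome through the exit status. According to the oscap man page, 0 means every rule passed, 2 means at least one rule failed or returned unknown, and 1 means the evaluation itself hit an error. Here is a minimal sketch of a helper that turns that status into a message; the function name `describe_oscap_status` is hypothetical and not part of OpenSCAP:

```shell
#!/bin/sh
# Hypothetical helper (not part of OpenSCAP): translate the exit status of
# "oscap xccdf eval" into a human-readable message.
describe_oscap_status() {
    case "$1" in
        0) echo "all rules passed" ;;
        1) echo "evaluation error" ;;
        2) echo "at least one rule failed or returned unknown" ;;
        *) echo "unexpected exit status: $1" ;;
    esac
}

# Usage (assumes oscap is installed and you run the scan command above):
#   oscap xccdf eval ... ; describe_oscap_status $?
```

On a system that has not been hardened yet you should expect status 2, so a wrapper script should not treat it as a crash.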

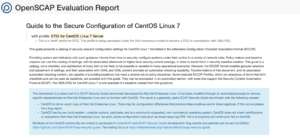

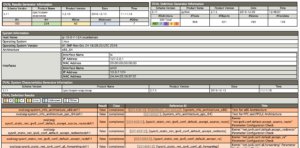

Now you can see your report, and it will be something like this (hostname.localdomain-scap-report-20161214.html):

See also different group rules considered:

You can go through the failures in red and see how to fix them manually, or dynamically generate a bash script to fix them. Take note of the score your system got; it will be your reference after hardening.
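If you prefer to read the score from the command line rather than from the HTML report, the results XML file contains it too. The sketch below assumes the file holds a `<score ...>NN.NN</score>` element on a single line and marks failed rules with `<result>fail</result>`; the function names `scap_score` and `scap_fail_count` are made up for this example:

```shell
#!/bin/sh
# Print the first score stored in an XCCDF results file.
# Assumption: the <score ...>NN.NN</score> element sits on a single line.
scap_score() {
    sed -n 's/.*<score[^>]*>\([0-9.]*\)<\/score>.*/\1/p' "$1" | head -n 1
}

# Count the lines that contain a rule result marked as "fail".
scap_fail_count() {
    grep -c '<result>fail</result>' "$1"
}

# Usage (file name as produced by the scan command in this article):
#   scap_score "$(hostname)-scap-results-$(date +%Y%m%d).xml"
#   scap_fail_count "$(hostname)-scap-results-$(date +%Y%m%d).xml"
```

This is plain text matching, not real XML parsing, so treat it as a quick check only.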

To generate a script that fixes everything needed to harden the system (and improve the score), we need to know the result-id of our report. We can get it by running this command against the results XML file:

export RESULTID=$(grep TestResult $(hostname)-scap-results-$(date +%Y%m%d).xml | awk -F\" '{ print $2 }')
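The one-liner above relies on `id` being the first quoted attribute of the `TestResult` element. As a slightly more defensive sketch, the hypothetical function below (`scap_result_id` is not an OpenSCAP command) looks for the `id` attribute by name and ignores the closing tag:

```shell
#!/bin/sh
# Print the id attribute of the first <TestResult ...> opening tag.
# Assumption: the opening tag and its id attribute are on one line.
scap_result_id() {
    sed -n 's/.*<TestResult[^>]* id="\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Usage:
#   RESULTID=$(scap_result_id "$(hostname)-scap-results-$(date +%Y%m%d).xml")
#   export RESULTID
```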

Run the oscap command to generate the fix script, which we will call fixer.sh:

oscap xccdf generate fix \
--result-id $RESULTID \
--output fixer.sh $(hostname)-scap-results-$(date +%Y%m%d).xml

chmod +x fixer.sh

Now you should have a fixer.sh script that fixes all the issues; open it and edit it if needed. For instance, remember that the script will enable SELinux and make many changes to the auditd configuration. If your setup is different, you can run commands like the ones below after running ./fixer.sh to keep SELinux permissive and to change some of the auditd actions.

sed -i "s/^SELINUX=.*/SELINUX=permissive/g" /etc/selinux/config

sed -i "s/^space_left_action =.*/space_left_action = syslog/g" /etc/audit/auditd.conf

sed -i "s/^admin_space_left_action =.*/admin_space_left_action = syslog/g" /etc/audit/auditd.conf
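Because these commands edit system files in place, you may want to keep a copy of the original first. The sketch below wraps the SELinux substitution in a hypothetical function, `set_selinux_mode`, that saves a `.bak` copy before rewriting the file. The optional second argument exists only so you can try it on a scratch file:

```shell
#!/bin/sh
# Set SELINUX=<mode> in a config file, keeping a .bak copy of the original.
# $1: mode (for example permissive); $2: file, defaults to /etc/selinux/config.
set_selinux_mode() {
    mode=$1
    file=${2:-/etc/selinux/config}
    # Copy first, then rewrite the file from the backup copy.
    cp -p "$file" "$file.bak" &&
        sed "s/^SELINUX=.*/SELINUX=$mode/" "$file.bak" > "$file"
}

# Usage (as root, after running ./fixer.sh):
#   set_selinux_mode permissive
```

The pattern only matches lines that start with `SELINUX=`, so the `SELINUXTYPE=` line is left alone.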

Then you can build a new assessment report to see how much your system hardening has improved (note that I added -after to the file names):

oscap xccdf eval --profile stig-rhel7-server-upstream \
--results $(hostname)-scap-results-$(date +%Y%m%d)-after.xml \
--report $(hostname)-scap-report-$(date +%Y%m%d)-after.html \
--oval-results --fetch-remote-resources \
--cpe /usr/share/xml/scap/ssg/content/ssg-rhel7-cpe-dictionary.xml \
/usr/share/xml/scap/ssg/content/ssg-centos7-xccdf.xml
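With both results files at hand, you can also list which rules went from failing to not failing. The following is a rough sketch, assuming each `<rule-result idref="...">` opening tag is on one line and is followed by its `<result>` element. The function names are hypothetical, and the text matching is not a substitute for a real XML parser:

```shell
#!/bin/sh
# Print the idref of every rule whose result is "fail", sorted.
scap_failed_rules() {
    awk '
        /<rule-result / {
            # Remember the idref of the rule currently being read.
            if (match($0, /idref="[^"]*"/)) {
                rule = substr($0, RSTART + 7, RLENGTH - 8)
            }
        }
        /<result>fail<\/result>/ { print rule }
    ' "$1" | sort
}

# Print rules that failed in the first file but no longer fail in the second.
scap_fixed_rules() {
    before_list=$(mktemp)
    after_list=$(mktemp)
    scap_failed_rules "$1" > "$before_list"
    scap_failed_rules "$2" > "$after_list"
    # comm -23 keeps the lines that appear only in the first sorted list.
    comm -23 "$before_list" "$after_list"
    rm -f "$before_list" "$after_list"
}

# Usage:
#   scap_fixed_rules before-results.xml after-results.xml
```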

Additionally, we can generate another evaluation report of the system in OVAL format (Open Vulnerability and Assessment Language):

oscap oval eval --results $(hostname)-oval-results-$(date +%Y%m%d).xml \
--report $(hostname)-oval-report-$(date +%Y%m%d).html \
/usr/share/xml/scap/ssg/content/ssg-rhel7-oval.xml

The OVAL report gives you another view of your system's status and configuration, so that you can improve it and follow up, making sure your environment reaches the level your organization requires.

Sample OVAL report:

Happy hardening!

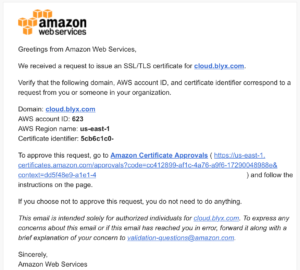

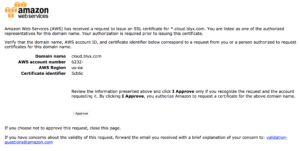

3. Once we have all DNS steps done, let’s create our wild card certificate with AWS Certificate Manager for *.cloud.blyx.com. Remember that to validate the certificate creation you will get an email from AWS and you will have to approve the request by following the instructions on the email:

3. Once we have all DNS steps done, let’s create our wild card certificate with AWS Certificate Manager for *.cloud.blyx.com. Remember that to validate the certificate creation you will get an email from AWS and you will have to approve the request by following the instructions on the email:

SCAP (Security Content Automation Protocol)

SCAP (Security Content Automation Protocol)

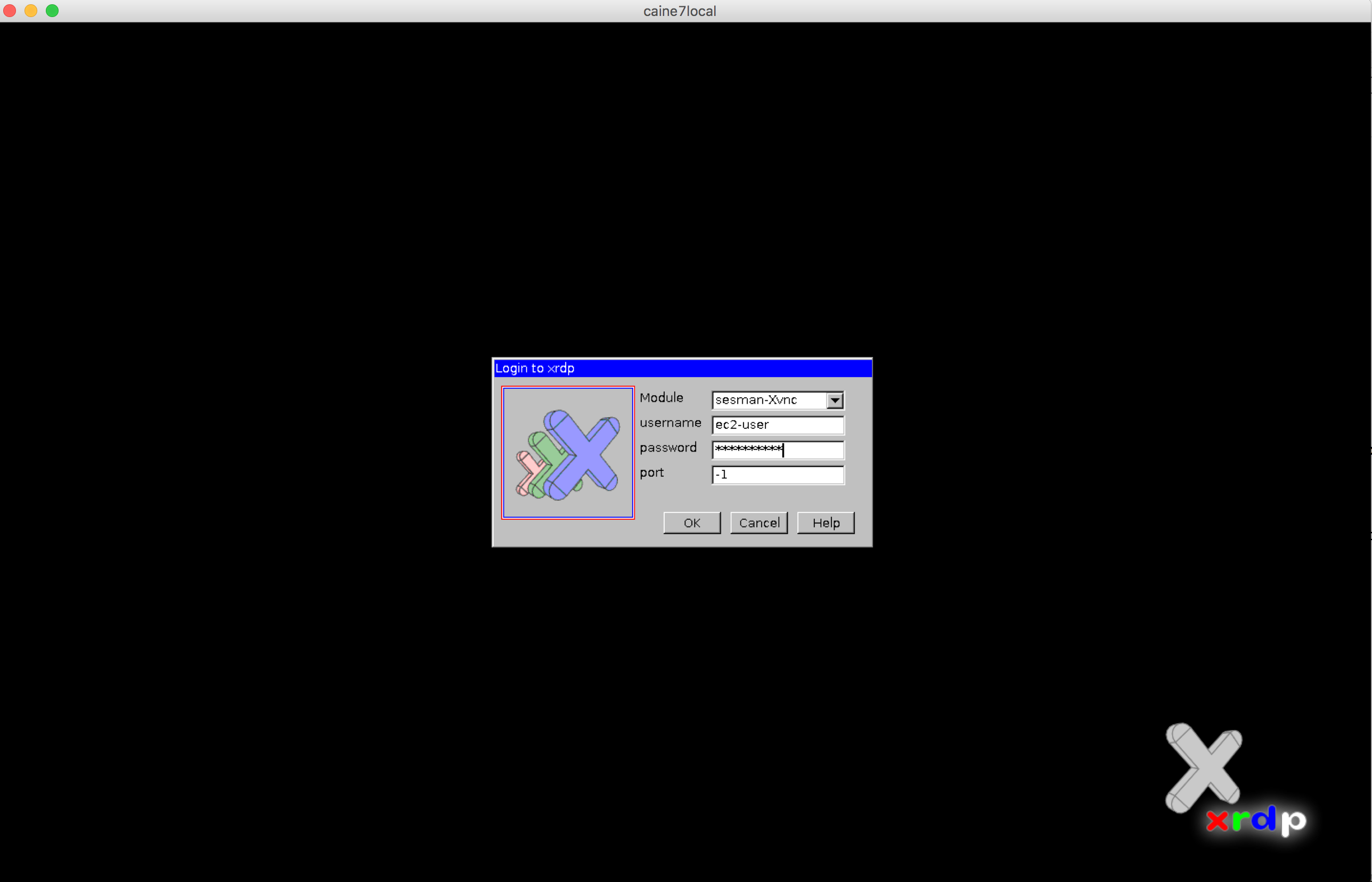

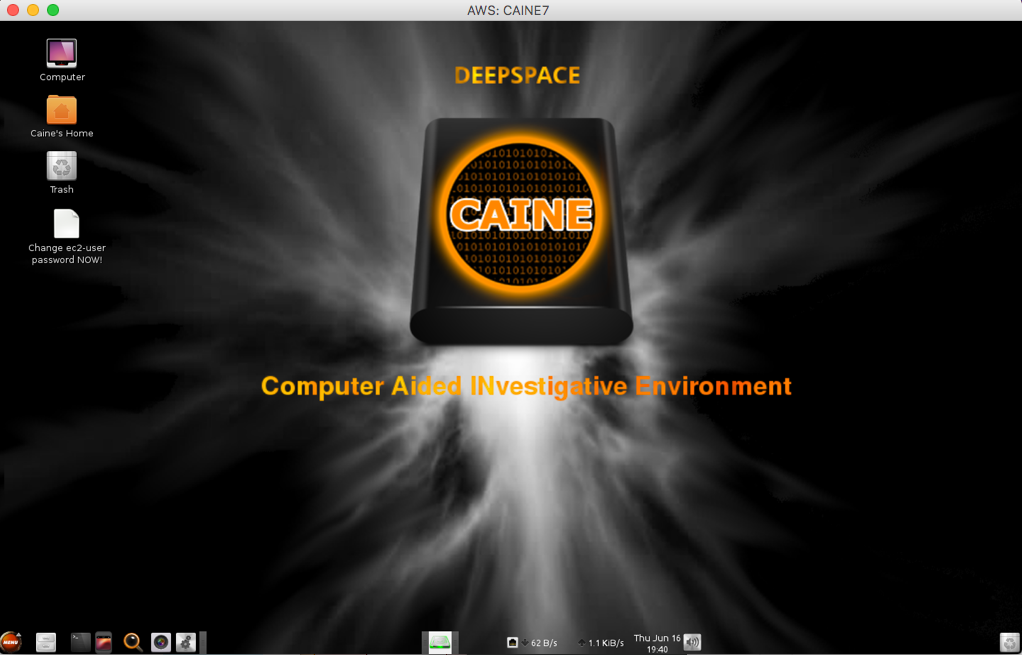

If you work with AWS, you may have to perform a forensics analisys at some point. As discussed in

If you work with AWS, you may have to perform a forensics analisys at some point. As discussed in

In order to give back to the Open Source community what we take from it (actually from the

In order to give back to the Open Source community what we take from it (actually from the  Now, with this provided CloudFormation template you can deploy SecurityMonkey pretty much production ready in a couple of minutes.

Now, with this provided CloudFormation template you can deploy SecurityMonkey pretty much production ready in a couple of minutes.